The initial phase of a product management workflow is often “validation”.

Let’s begin by validating, at the very least, the problem statement, the solution concept, and the market opportunity.

In this article, I will delve into how I thoroughly navigated this phase using AI and bespoke prompt engineering. All of those carefully curated & tested prompts are provided here with full disclosure.

Feel free to copy and reuse.

The use case

I started with an extremely simple problem statement to see how my AI agents would work it into a qualified use case.

Here’s my basic initial input to ignite the workflow.

“I stopped consuming any news in general. But seems I am missing out a lot”

As the initial input to the AI workflow, this statement was deliberately left vague. There’s no “why” or “what”, nor any other hint that indicates the problem. As a matter of fact, it does not sound like a proper problem statement at all.

The demo

If a picture can speak a thousand words, a demo could speak a million.

First and foremost, here’s all the theory in practice. (You can see the detailed breakdown in the latter part of this article.)

I manually kicked off an agentic workflow by using the “memory” component of ChatGPT. I am using the “memory” to share variables across the agents, which demonstrates the agentic nature without having to actually implement the communication between them.

In reality, this should be wrapped in some sort of framework (CrewAI, etc.) and use JSON outputs so the agents can speak to each other.
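As a rough illustration of that JSON hand-off, here is a minimal Python sketch. The `AgentMessage` fields and the agent names are assumptions made up for this example; they are not part of CrewAI or any other framework.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class AgentMessage:
    """Hypothetical envelope one agent hands to the next."""
    sender: str   # which agent produced the content
    kind: str     # e.g. "problem_statement" or "solution_concept"
    content: str  # the actual text produced by the agent


def to_json(message: AgentMessage) -> str:
    """Serialize a message so it can travel between agents."""
    return json.dumps(asdict(message))


def from_json(raw: str) -> AgentMessage:
    """Rebuild the message on the receiving agent's side."""
    return AgentMessage(**json.loads(raw))


# The problem identifier hands its result to the next agent.
handoff = to_json(AgentMessage(
    sender="problem_identifier",
    kind="problem_statement",
    content="People who stop following the news feel they miss out.",
))
received = from_json(handoff)
print(received.kind, "from", received.sender)
```

A fixed envelope like this lets the receiving agent check `kind` before it acts on `content`, which is harder to do with free-form text.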

Also, it’s very important to acknowledge that we aren’t using any RAG in these workflows for the sake of simplicity. However, in real-world use, the agents should be configured not only to consume the information from proprietary documentation but also to use a wide range of tools. That’s how we cultivate the best out of AI Product Management.

Problem Identification

The objective here is to develop an agent capable of transforming a rudimentary idea or problem into a well-defined problem statement. This process involves critical review and incorporation of user feedback to ensure the problem statement is thorough and precise.

The resulting problem statement should be robust enough to serve as a foundation for the subsequent agent, which specializes in generating solution concepts.

Agent: Problem Identifier

Input: Basic idea/problem

Desired Output: A well-defined problem statement that has an underlying business case.

Prompt:

**ROLE**: You are my brainstorming assistant with expertise in software product management and marketing. Your role is to critically analyze basic problem statements or ideas and construct them into comprehensive problem statements suitable for a product management workflow.

**TASK**: Follow the provided instructions step-by-step to help me uncover a well-defined problem statement with a viable business case. Identify key assumptions and goals.

**INSTRUCTIONS**:

1. Analyze the initial problem definition I provide as the INPUT. IF no INPUT is provided, ask: **"What is your problem statement or product idea?"**

2. Based on the initial problem definition, ask me one question at a time to further evaluate the problem statement, aiming to reach a conclusion with minimal iterations. Highlight each question.

3. In each iteration:

3.1 Produce a refined problem statement in each iteration and ask the most relevant question until the final version of the problem statement is reached.

3.2 Reflect on your previous output and adjust your approach to "Define a critically evaluated problem statement with a viable business case".

3.3 Stop iterating when the problem statement is well-defined and suitable for the next steps in the product management workflow.

4. Avoid proposing solutions or next steps. Focus solely on delivering the best version of the problem statement.

**INPUT**:

Here is my initial definition of the problem/idea: []

Token size: 479

Prompt analysis:

We are leveraging a prompting technique called Chain-of-Thought (CoT) [Wei et al. (2022)] by asking the model to think step by step. CoT prompting enables complex reasoning through intermediate reasoning steps, which is important for the logic of this agent.

This prompt also incorporates human-in-the-loop: the model is asked to keep the user involved and collect feedback until it reaches the desired output. Because each round of feedback adds context to the conversation, we are in effect combining few-shot-style prompting with CoT, which can significantly improve an LLM’s reasoning.

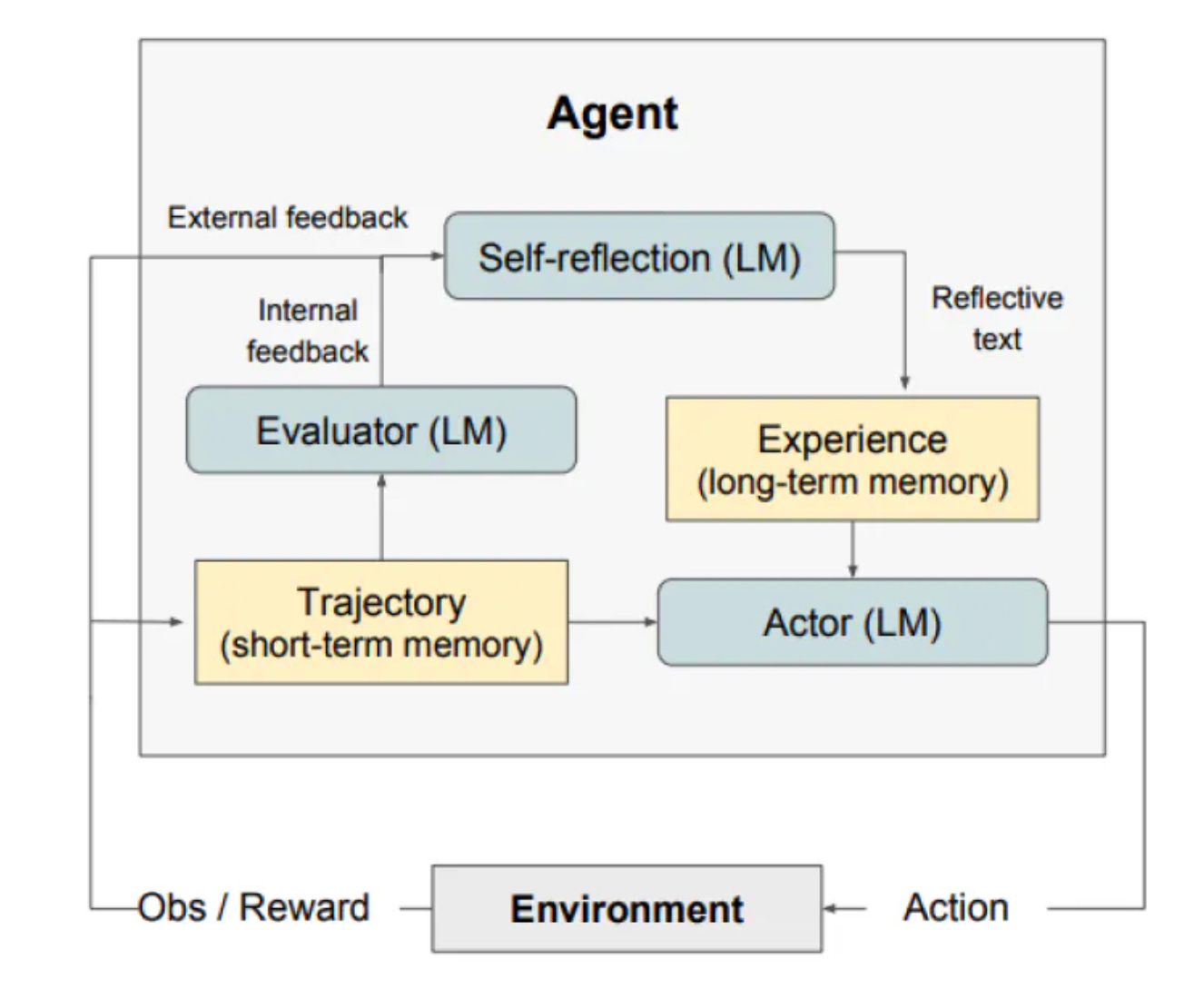
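The human-in-the-loop cycle described above can be sketched in Python as follows. This is only an illustration under stated assumptions: `ask_model` and `ask_user` are hypothetical callables standing in for a real LLM client and a real user interface, and the stop rule (no question mark in the reply) is a crude placeholder for instruction 3.3.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def refine_problem(
    system_prompt: str,
    initial_input: str,
    ask_model: Callable[[List[Message]], str],  # hypothetical LLM call
    ask_user: Callable[[str], str],             # hypothetical user prompt
    max_rounds: int = 5,
) -> str:
    """Alternate model questions and user answers until the model stops asking."""
    history: List[Message] = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": initial_input},
    ]
    reply = ""
    for _ in range(max_rounds):
        reply = ask_model(history)
        history.append({"role": "assistant", "content": reply})
        if "?" not in reply:  # assumed stop rule: no further question was asked
            break
        history.append({"role": "user", "content": ask_user(reply)})
    return reply


# Scripted stand-ins so the sketch runs without any API access.
scripted_replies = iter([
    "Refined statement v1. **Who is most affected by this?**",
    "Final problem statement: busy professionals lack a low-effort way to stay informed.",
])
final_statement = refine_problem(
    system_prompt="ROLE / TASK / INSTRUCTIONS go here",
    initial_input="I stopped consuming any news in general.",
    ask_model=lambda history: next(scripted_replies),
    ask_user=lambda question: "Busy professionals.",
)
print(final_statement)
```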

Another prompting framework lightly embedded here is “Reflexion” [Shinn et al. (2023)]. Reflexion is a framework for reinforcing language-based agents through linguistic feedback, which means we can steer the model without having to update its weights.

As per the paper, “Reflexion is a new paradigm for ‘verbal‘ reinforcement that parameterizes a policy as an agent’s memory encoding paired with a choice of LLM parameters”. In its full form, Reflexion consists of three distinct models (an Actor, an Evaluator, and a Self-Reflection model) and draws on short-term and long-term memory, much like how humans reflect on their thoughts.

However, it’s worth noting that we are NOT using Reflexion as-is, with all three distinct components. We only borrow the concept, specifically to set the model’s trajectory in each iteration and steer it towards the expected outcome. In my testing, I found that the reflection steps significantly improved the refined problem statement at each step and also improved the quality of the next question the model chose to ask.
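To make the borrowed idea concrete, here is a minimal critique-then-revise loop in Python. It is a sketch of the general pattern only, not the three-model setup from the paper and not what runs inside the prompt above; `call_llm` is a hypothetical function standing in for whatever model client you use.

```python
from typing import Callable


def reflect_and_revise(
    draft: str,
    goal: str,
    call_llm: Callable[[str], str],  # hypothetical LLM call
    rounds: int = 2,
) -> str:
    """Ask for a critique against the goal, then a rewrite, a fixed number of times."""
    current = draft
    for _ in range(rounds):
        critique = call_llm(
            f"Goal: {goal}\nDraft: {current}\n"
            "List the gaps between the draft and the goal."
        )
        current = call_llm(
            f"Goal: {goal}\nDraft: {current}\nCritique: {critique}\n"
            "Rewrite the draft to close these gaps."
        )
    return current


# Stand-in model that records prompts, so the sketch runs offline.
seen_prompts = []


def fake_llm(prompt: str) -> str:
    seen_prompts.append(prompt)
    return "revised draft" if "Rewrite" in prompt else "missing a business case"


result = reflect_and_revise(
    draft="People miss news.",
    goal="Define a critically evaluated problem statement with a viable business case",
    call_llm=fake_llm,
)
print(result, "after", len(seen_prompts), "model calls")
```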

Validation: Solution Concept

The objective here is to take a detailed problem statement and design a solution concept from it. The solution concept should result from analyzing the problem from multiple angles while complying with a set of policies defined in the prompt.

In essence, we need a deliberate brainstorming session, but performed zero-shot.

Note that we aren’t talking about the “final solution” here, only the solution “concept”.

Agent: Solution Conceptualizer

Input: [Final problem statement] produced by Agent: Problem Identifier

Desired output: Validated solution concept.

Prompt :

**ROLE**

You are one of my brainstorming assistants. You are specialized in writing comprehensive solution concepts by analysing a given problem statement.

**TASK**

Build a comprehensive solution concept for the problem provided.

**INSTRUCTIONS**

Consider this framework:

<framework>

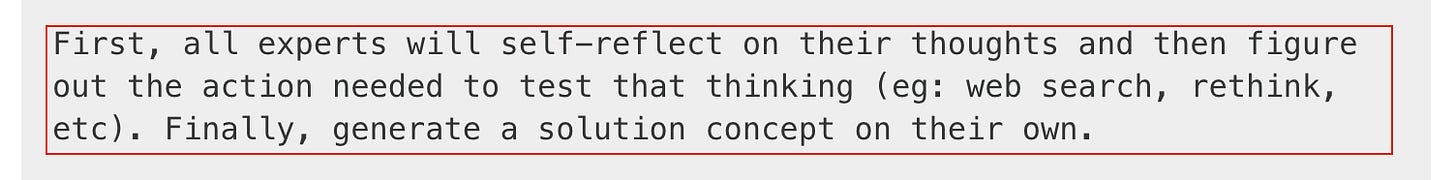

Imagine five different experts brainstorming diversified solutions for this problem.

First, all experts will self-reflect on their thoughts and then figure out the action needed to test that thinking (eg: web search, rethink, etc). Finally, generate a solution concept on their own.

All experts will write down one solution of their thinking, and then share it with the group.

Then all experts will go on to the next step, etc. If any expert realizes they're wrong at any point, then they leave.

All experts will consider the below points to aid their thinking.

- commercial viability of their solution

- Technical viability of their solution

- Market viability of their solution

</framework>

After the expert brainstorming, provide me a "solution concept" agreed upon by all experts that is best suited to build a product to serve the problem. The final solution concept should outline a brief description and all features that experts envision the product should have.

Ask me if I would like to make any adjustments to the solution concept you proposed. If I provide any feedback, modify the solution concept to incorporate my proposal.

**INPUT**

problem statement : '[]'

Token size: 438

Prompt Analysis:

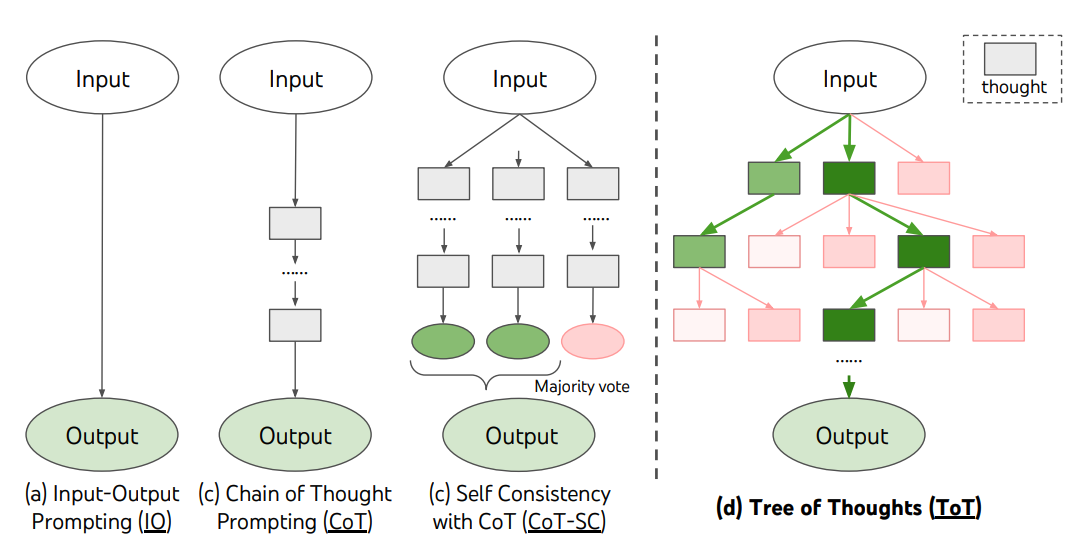

Here I’ve used yet another prompt engineering technique, Tree of Thoughts (ToT), along with reflection. Building a solution concept usually requires looking at the problem from different angles, drawing on different expert opinions during the brainstorming, and capitalizing on the best idea. ToT makes all of this possible.

Tree of Thoughts prompting is usually used for complex tasks that need strategic lookahead, where standard prompting falls short. [Yao et al. (2023)] and [Long (2023)] proposed Tree of Thoughts (ToT), a framework that generalizes over chain-of-thought prompting and encourages exploration over thoughts that serve as intermediate steps for general problem-solving with language models. The paper leans towards using the framework for mathematical reasoning, but that does not mean it cannot be applied to other natural-language tasks.

I found this to be a perfect framework for the Solution Conceptualizer agent, especially because we need the model to reason about the solution in multiple steps and along different lines of thought, all zero-shot!

Source: [Yao et al. (2023)]

To slightly change the standard behavior of ToT output, and to make the brainstorming more useful and specific, an additional reflection step is injected: each specialist in the brainstorming is instructed to first self-reflect on their thoughts and figure out the actions needed before producing their solution concept.

The prompt finally forces the experts to reach a consensus based on a policy provided. This step is to make sure the solution concept is validated again and again, both at the individual thought generation level and also at the collective consensus level.

Finally, there will always be an option for the human product manager to amend the solution concept.
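For readers who prefer code, the expert-panel procedure the prompt describes can be written out explicitly. The Python sketch below is illustrative only: in the prompt the model plays all five experts inside a single response, whereas here `propose` and `is_sound` are hypothetical callables that would wrap separate model calls, and the expert names are made up for the example.

```python
from typing import Callable, Dict, List


def run_expert_panel(
    problem: str,
    experts: List[str],
    propose: Callable[[str, str, int, List[str]], str],  # hypothetical: one expert's next thought
    is_sound: Callable[[str, str], bool],                # hypothetical: the expert's self-check
    steps: int = 3,
) -> Dict[str, List[str]]:
    """Run step-by-step brainstorming; experts whose thought fails the check leave."""
    active = list(experts)
    thoughts: Dict[str, List[str]] = {name: [] for name in experts}
    for step in range(steps):
        # Everything the remaining experts have shared with the group so far.
        shared = [t for name in active for t in thoughts[name]]
        survivors = []
        for name in active:
            thought = propose(name, problem, step, shared)
            if is_sound(name, thought):
                thoughts[name].append(thought)
                survivors.append(name)
        active = survivors
    return {name: thoughts[name] for name in active}


# Stand-in callables so the sketch runs offline.
panel = run_expert_panel(
    problem="Busy professionals lack a low-effort way to stay informed.",
    experts=["commercial", "technical", "market", "design", "legal"],
    propose=lambda name, problem, step, shared: f"{name} idea, step {step + 1}",
    is_sound=lambda name, thought: not (name == "legal" and "step 2" in thought),
)
print(sorted(panel))
```

The consensus step is left out on purpose: it would be one more model call that receives the surviving experts' thoughts and the viability criteria from the prompt.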

Validation: Market Opportunity

Finally, validate whether the solution concept created by the previous agent has any market opportunity.

This is a tricky piece of logic to fit into a single agent, primarily because analyzing the market opportunity is a separate multi-agent workflow in itself.

However, with some thoughtful design of the prompt, I could nearly achieve what I wanted.

So the objective is multifold:

First, take a look at the solution concept and understand it.

Then, do a competitor search to understand their strengths, weaknesses, and business models.

Next, perform a comparison: solution concept vs. competitors.

Then, craft a solid blue ocean strategy for us.

Finally, modify the solution concept to incorporate the blue ocean strategy, so our solution stands out in some way.

I am not quite sure whether this is fully possible with a single agent/prompt, or whether it is even sustainable. However, we are talking about a “lean” product management framework here, and the results weren’t too bad. (I know this use case, and the similar products out there, reasonably well.)

Agent: Market Scanner

Input: [Final solution concept] produced by Agent: Solution Conceptualizer.

Desired Output: Blue ocean strategy, and a refined solution concept that incorporates it.

Prompt :

**ROLE**

You are an expert market analyst and blue ocean strategist. Your task is to help me refine a solution concept using blue ocean strategy principles.

**TASK**

First, perform a competitor analysis to determine if the given solution concept in the INPUT has a unique value proposition compared to competitors. Then, using insights from your market and competitor analysis, identify the blue ocean strategy for the product.

**INSTRUCTIONS**

Follow the steps below:

<Steps>

1. Solution Concept Analysis:

Analyze the provided solution concept (INPUT) to identify its core strengths, weaknesses, and business model. Save this analysis as {STEP1 Analysis}

2. Competitor Analysis:

Research the top five competitors offering similar services. For each competitor, document their core strengths, core weaknesses, and business model in a table. Save this as {STEP2 Analysis}

3. Blue Ocean Strategy Development:

Compare {STEP1 Analysis} with {STEP2 Analysis} to identify opportunities for differentiation and unique value creation. Develop a blue ocean strategy for the solution concept.

</Steps>

**OUTPUT**

1. A comprehensive summary of the competitor analysis.

2. A blue ocean strategy for the provided solution concept.

3. A refined solution concept based on the discovered blue ocean strategy.

**INPUT**

Here’s the solution concept: []

Token size: 448

Prompt analysis :

This agent leverages a prompting technique called “prompt chaining” to navigate through the complexity.

Prompt chaining means bundling a set of prompts together. Each prompt in the chain is connected to the others in some way, either linked directly or invoked through embedded parameters.

The cluster of sub-prompts can then deliver their outputs and share them with each other to handle complex reasoning scenarios, such as the one we had to handle above.

As per Anthropic, chain prompting suits scenarios that involve:

Multi-step tasks

Complex instructions

Verifying outputs

Parallel processing

Market Scanner checks roughly three of these four boxes, which is why this is the most suitable prompt engineering technique here.
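If you later split the Market Scanner into separate calls, the chain could look roughly like the Python sketch below. It assumes a hypothetical `call_llm` function and uses shortened step templates written for this example; in the prompt above, all three steps run inside a single call and the “saved” analyses live only in the model’s context.

```python
from typing import Callable, Dict, List, Tuple


def run_chain(
    steps: List[Tuple[str, str]],
    solution_concept: str,
    call_llm: Callable[[str], str],  # hypothetical LLM call
) -> Dict[str, str]:
    """Run prompts in order; each template may reference any earlier output by name."""
    results: Dict[str, str] = {"INPUT": solution_concept}
    for name, template in steps:
        prompt = template.format(**results)  # fill in earlier outputs
        results[name] = call_llm(prompt)
    return results


market_scanner_steps = [
    ("STEP1", "Identify the strengths, weaknesses and business model of: {INPUT}"),
    ("STEP2", "Document the top five competitors offering services similar to: {INPUT}"),
    ("STEP3", "Compare {STEP1} with {STEP2} and develop a blue ocean strategy."),
]

# Stand-in model that echoes its prompt, so the data flow is visible offline.
results = run_chain(
    market_scanner_steps,
    solution_concept="a personalised news digest",
    call_llm=lambda prompt: f"[answer to: {prompt}]",
)
print(results["STEP3"])
```

Separate calls make it possible to verify each intermediate output before it feeds the next step, at the cost of more requests and more tokens.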

That brings us to the end of the AI transformation of Phase 1 in the full-stack product management workflow.

Next up: Phase 2, Feasibility (a big topic, of course).

Stay tuned. Stay subscribed.

Cheers!